🎤 Full Interview: Robert Ta, Co-founder @ Epistemic Me

"You can’t build personalized AI—or aligned AI—without modeling what people believe about themselves and their world."

Founder Story & Vision

Who they are & what they’re building

- Robert Ta is the co-founder of Epistemic Me, an open-source AI infrastructure startup that helps AI systems become belief-aware. Its SDK lets developers build agents that understand a user’s worldview, not just their clicks.

Why now & what’s the big bet

- Current personalization is surface-level. Robert’s bet? Future-ready AI must reason through the lens of user beliefs: what users think, what they value, and how those ideas shift over time. In his view, this is the next layer of trust and alignment in AI design.

🧩 Real-World Use Cases

How it works in the wild

- Don’t Die App: Bryan Johnson’s AI coach uses Epistemic Me to ground its guidance in each user’s belief system. Instead of generic advice, it tailors suggestions to the user’s worldview (e.g., whether they believe fasting is effective or that supplements are essential).

- Belief Drift Detection: Tracks how a user’s confidence or mindset evolves over time and feeds those changes into future recommendations.

- Mirror Chat: An upcoming feature that reflects the user’s core beliefs back to them for deeper self-awareness.
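Belief drift detection is only described at a high level in the interview. The sketch below shows one way it could work, assuming each belief is stored with a timestamped history of confidence scores in [0, 1], and that "drift" means the latest score moving away from the average of the few scores before it by more than a threshold. `Belief`, `detect_drift`, `next_step`, the window size, and the threshold are hypothetical names and values for illustration, not the Epistemic Me SDK's API.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import List, Optional, Tuple


@dataclass
class Belief:
    """A user belief plus its confidence history (hypothetical schema, not the Epistemic Me SDK)."""
    statement: str
    # (timestamp, confidence in [0, 1]) pairs, kept in chronological order
    history: List[Tuple[datetime, float]] = field(default_factory=list)

    def observe(self, when: datetime, confidence: float) -> None:
        if not 0.0 <= confidence <= 1.0:
            raise ValueError("confidence must be in [0, 1]")
        self.history.append((when, confidence))
        self.history.sort(key=lambda item: item[0])  # tolerate out-of-order observations

    @property
    def current(self) -> float:
        return self.history[-1][1]


def detect_drift(belief: Belief, window: int = 3, threshold: float = 0.2) -> Optional[float]:
    """Signed change between the latest confidence and the mean of the previous
    `window` observations, or None if there is too little history or the change
    is smaller than `threshold` (both defaults are illustrative assumptions)."""
    if len(belief.history) < window + 1:
        return None
    previous = [conf for _, conf in belief.history[-(window + 1):-1]]
    drift = belief.current - sum(previous) / len(previous)
    return drift if abs(drift) >= threshold else None


def next_step(belief: Belief) -> str:
    """Hypothetical policy: let detected drift shape the coach's next recommendation."""
    drift = detect_drift(belief)
    if drift is None:
        return "continue_plan"  # belief looks stable
    return "check_in" if drift < 0 else "reinforce"  # confidence fell vs. rose


fasting = Belief("Intermittent fasting is effective for me")
for day, conf in enumerate([0.8, 0.75, 0.8, 0.4], start=1):
    fasting.observe(datetime(2024, 1, day), conf)
print(detect_drift(fasting), next_step(fasting))
```

With this sample history, the latest score (0.4) sits roughly 0.38 below the average of the three scores before it, so `next_step` returns `"check_in"`. Comparing against a short moving average, rather than the very first observation, keeps a single old reading from dominating the drift signal.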

What you’ll learn:

-

How Robert and team tackle belief drift and uncertainty in long-term personalization

-

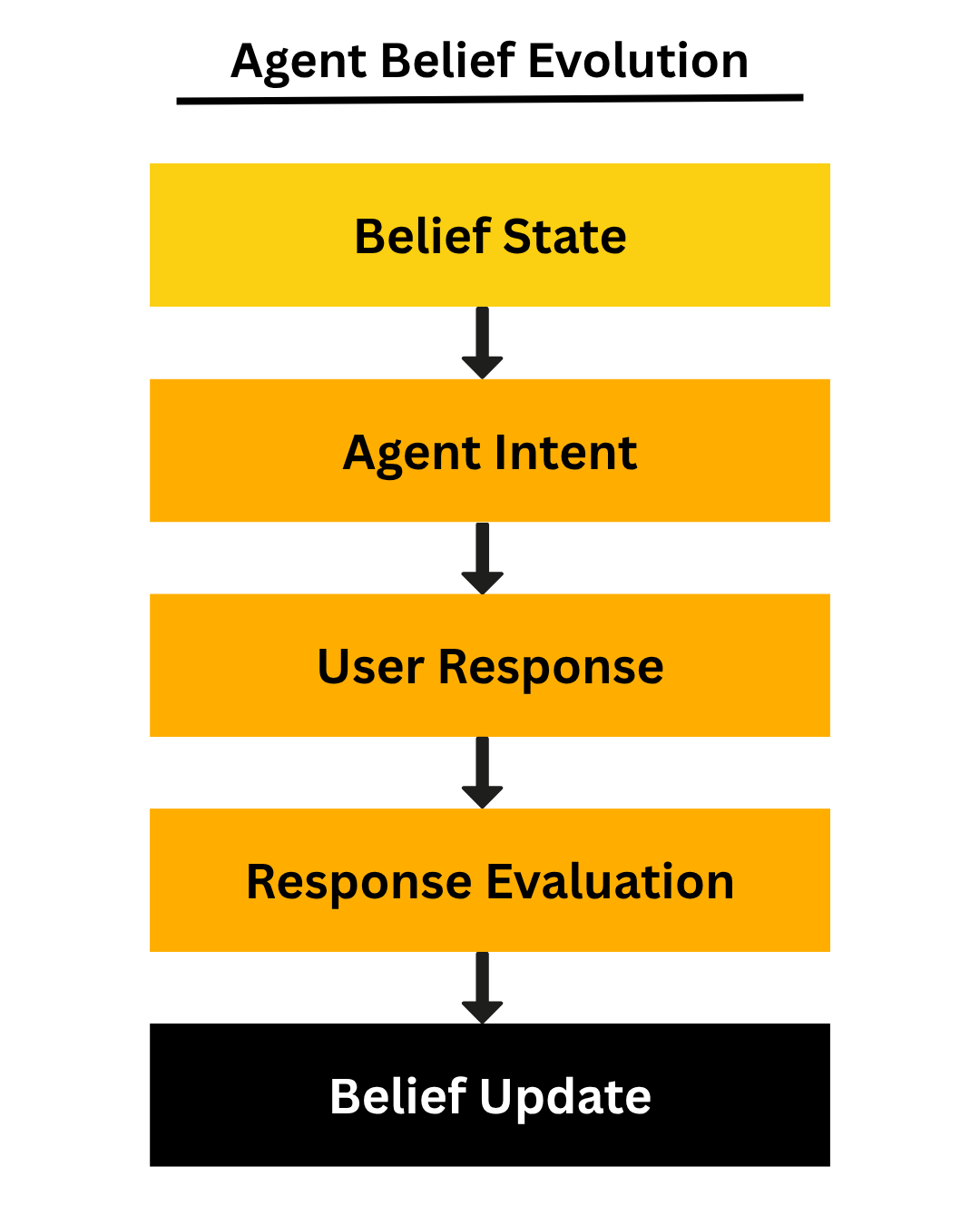

A framework for building belief-aware agents using observation, intent modeling, and memory

-

Why shared values between user and AI are non-negotiable for long-term alignment

-

How to evaluate AI systems based on belief congruence and not just surface metrics
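Belief congruence is the evaluation idea that runs through this episode. As a rough sketch, assume each evaluation case pairs the user's beliefs (as confidence scores) with the agent's response, plus labels saying which of those beliefs the response takes for granted or contradicts. In practice those labels might come from a human reviewer or an LLM judge; here they are written by hand. `EvalCase`, `belief_congruence`, `flag_incongruent`, the scoring rule, and the 0.5 cutoff are all hypothetical, not a published Epistemic Me metric.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class EvalCase:
    """One evaluation case (hypothetical structure)."""
    beliefs: Dict[str, float]  # context: belief statement -> user's confidence in [0, 1]
    response: str              # output: what the agent said
    stances: Dict[str, bool]   # statement -> True if the response assumes it, False if it contradicts it


def belief_congruence(case: EvalCase) -> Optional[float]:
    """Mean agreement in [0, 1] between the response's stances and the user's beliefs.

    Assuming a belief held at confidence c scores c; contradicting it scores 1 - c.
    Returns None when the response touches none of the user's known beliefs."""
    scores = [
        case.beliefs[s] if assumes else 1.0 - case.beliefs[s]
        for s, assumes in case.stances.items()
        if s in case.beliefs  # skip stances we have no belief data for
    ]
    return sum(scores) / len(scores) if scores else None


def flag_incongruent(cases: List[EvalCase], threshold: float = 0.5) -> List[int]:
    """Indices of cases whose congruence falls below `threshold` (an illustrative cutoff)."""
    flagged = []
    for i, case in enumerate(cases):
        score = belief_congruence(case)
        if score is not None and score < threshold:
            flagged.append(i)
    return flagged


beliefs = {"fasting is effective": 0.9, "supplements are essential": 0.2}
cases = [
    EvalCase(beliefs, "Try a 16:8 eating window this week.", {"fasting is effective": True}),
    EvalCase(beliefs, "Skip fasting and start a supplement stack.",
             {"fasting is effective": False, "supplements are essential": True}),
]
print([belief_congruence(c) for c in cases], flag_incongruent(cases))
```

The first response scores 0.9 because it builds on a belief the user holds strongly. The second scores about 0.15 because it contradicts that belief and leans on one the user largely rejects, so it is flagged. A low score signals a mismatch with the user's mental model, not a factual error, so a check like this complements accuracy metrics rather than replacing them.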

Some Takeaways:

- Model Beliefs Early: Don’t treat personalization as an afterthought. Build your AI agent to explicitly represent user beliefs.

- Use Layered Memory: Incorporate multiple memory layers (working, episodic, semantic) into your architecture. Store recent context, key past events, and stable beliefs separately.

- Embrace Epistemic Evals: Traditional metrics (accuracy, clicks) aren’t enough for personal AI. Measure your agent on belief congruence: are its suggestions consistent with the user’s worldview? Build “epistemic evaluations” that ask, “Did this response fit the user’s mental model?” Robert’s team even built a feedback loop that runs Context (beliefs + past interactions) → Output → Evaluation. Belief-aware agents use loops like this to update their model of the user and stay aligned over time. Focus on empathy: success is when users feel the AI understood them, not just when they click a link.

- Guard Against Hallucinations: Anchor your AI’s responses in validated content, especially in high-stakes domains like health.

- Prioritize Trust: Remember the Golden Rule of personalization: “Personalization isn’t just nice to have. It’s necessary for trust.”

- Iterate in Public & Leverage Open-Source: Building belief-based AI is hard work. Robert advocates open-source collaboration to iterate and learn.
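The layered-memory takeaway can be made concrete with a small sketch. It assumes three stores kept apart, as the takeaway suggests: a bounded buffer of recent turns (working), timestamped key events (episodic), and stable beliefs with confidence scores (semantic), merged only when the agent builds its prompt context. `LayeredMemory`, its methods, and the default sizes are hypothetical names for illustration, not the Epistemic Me SDK.

```python
from collections import deque
from dataclasses import dataclass, field
from datetime import datetime
from typing import Deque, Dict, List, Tuple


@dataclass
class LayeredMemory:
    """Working, episodic, and semantic memory stored separately (hypothetical design)."""
    working_size: int = 5
    # Working memory: the most recent turns only; the oldest turn drops off when full.
    working: Deque[str] = field(init=False)
    # Episodic memory: key past events with the time they happened.
    episodic: List[Tuple[datetime, str]] = field(default_factory=list)
    # Semantic memory: stable beliefs, statement -> confidence in [0, 1].
    semantic: Dict[str, float] = field(default_factory=dict)

    def __post_init__(self) -> None:
        self.working = deque(maxlen=self.working_size)

    def add_turn(self, text: str) -> None:
        self.working.append(text)

    def record_episode(self, when: datetime, event: str) -> None:
        self.episodic.append((when, event))

    def update_belief(self, statement: str, confidence: float) -> None:
        self.semantic[statement] = confidence

    def build_context(self, max_episodes: int = 3) -> str:
        """Merge the layers into one prompt context: beliefs, then recent episodes, then turns."""
        beliefs = [f"- {s} (confidence {c:.1f})" for s, c in sorted(self.semantic.items())]
        recent = sorted(self.episodic)[-max_episodes:]  # latest events, oldest first
        episodes = [f"- {when:%Y-%m-%d}: {event}" for when, event in recent]
        return "\n".join(
            ["Beliefs:", *beliefs, "Key events:", *episodes, "Recent turns:", *self.working]
        )


memory = LayeredMemory(working_size=2)
for turn in ["hi", "I slept badly last night", "should I still fast today?"]:
    memory.add_turn(turn)  # "hi" is evicted once the buffer holds two newer turns
memory.record_episode(datetime(2024, 3, 1), "started a 16:8 fasting schedule")
memory.update_belief("fasting is effective", 0.8)
print(memory.build_context())
```

Keeping the layers separate means a burst of new chat turns can push out old small talk without ever overwriting a stable belief. Deciding when an observation in working memory should be promoted to episodic or semantic memory is the harder design question, and this sketch leaves it open.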

Epistemic Me is still early, but Robert’s vision is clear: AI assistants that truly “know us” require knowing our mental context. For founders and builders, the lesson is to treat beliefs as data. Building belief modeling into your product – as the Epistemic Me team is doing in the Don’t Die app – may be the key to unlocking hyper-personalization and lasting alignment with users.

In this episode, we cover:

00:00 – Intro: Fixing AI Personalization

04:24 – Belief Modeling in AI

07:40 – Don’t Die App Case Study

10:05 – Understanding Belief Drift

14:20 – Building AI Memory

18:11 – Epistemic vs. Standard Evals

21:45 – Trust & Shared Values

24:15 – Guardrails for AI Agents

28:38 – Why Open Source Matters

31:50 – Beliefs as User Data

36:05 – AI for Mental Health

40:20 – Personal AI vs. Human Support

44:35 – Can AI Be Better Than People?

48:10 – The “Mental Health Gym”

52:25 – Hustle vs. Health as a Founder

56:40 – The Healthcare Experience Gap

01:00:55 – What’s Broken in US Healthcare

01:05:10 – Closing the AI Care Gap

01:09:25 – Final Take on AI Alignment

For inquiries about sponsoring the podcast, email david@thehomabase.ai

Find Case Studies of all other episodes here.