We envision a future where AI is seamlessly woven into every product and service, creating experiences that are both magical and safe. At Adaline, we are building the infrastructure that empowers world-class product and engineering teams to collaboratively create, evaluate, and monitor the next generation of AI-powered applications.

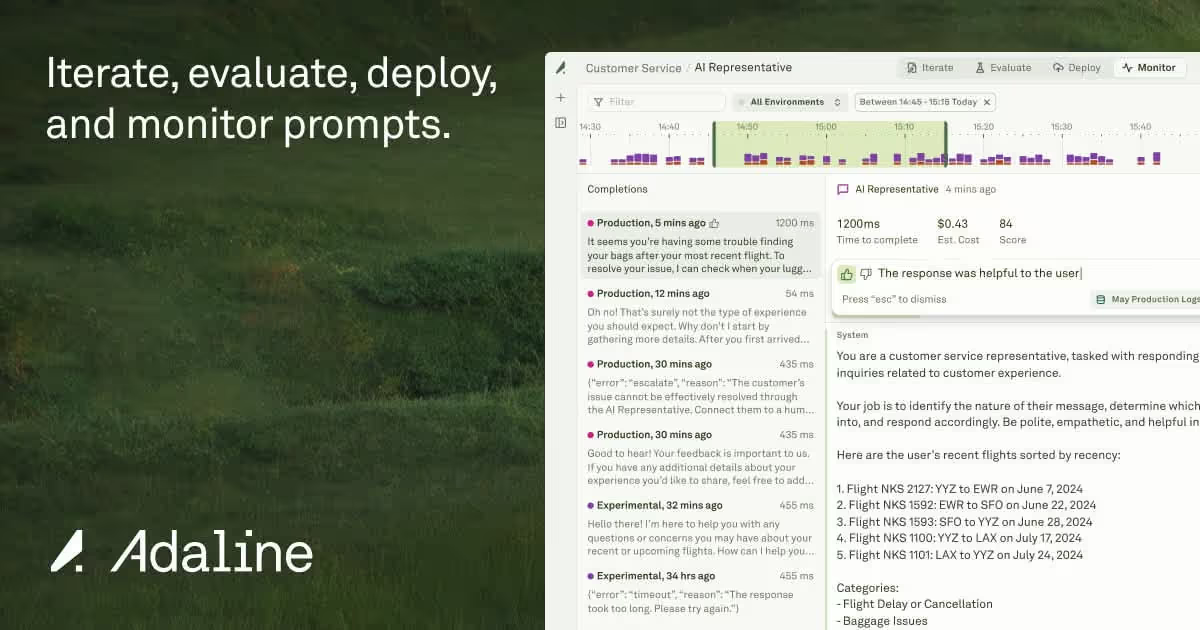

Our platform combines innovation with reliability, providing tools to iterate on large language model (LLM) prompts, run comprehensive evaluations, and maintain operational excellence through real-time monitoring. By enabling closer collaboration and more precise engineering, we aim to unlock the full potential of AI while maintaining the highest standards of safety and performance.

As we grow, we are committed to fostering a vibrant ecosystem where knowledge and technology converge, inspiring developers, researchers, and enterprises to build AI solutions that are not only powerful but also responsibly designed for the future.

Our Review

When we first explored Adaline's platform, what caught our attention wasn't the promise of yet another AI tool; it was their laser focus on solving the real-world challenges of building and scaling AI applications. As veterans in the AI space, we've seen countless platforms come and go, but Adaline's approach feels refreshingly practical.

Impressive Scale for a Newcomer

Despite launching only in 2024, Adaline's platform is already handling serious volume: over 200 million API calls and 5 billion tokens daily. Those figures are more than vanity metrics; they speak to the robustness of their infrastructure. With 99.998% uptime, they're delivering enterprise-grade reliability from day one.
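To put those figures in perspective, here is a back-of-the-envelope conversion. It assumes, purely for illustration, that traffic is spread evenly across a 24-hour day (86,400 s) and that uptime is measured over a 365-day year (525,600 min); real traffic is burstier, so peak rates will be higher than this average.

```latex
\frac{200 \times 10^{6}\ \text{calls}}{86{,}400\ \text{s}} \approx 2{,}315\ \text{calls/s on average}
\qquad
(1 - 0.99998) \times 525{,}600\ \text{min} \approx 10.5\ \text{min of downtime per year}
```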

Where It Really Shines

The platform's collaborative prompt engineering features are a standout. We've seen too many teams struggle with versioning and tracking changes across different prompts, and Adaline's solution feels like a breath of fresh air. Their evaluation toolkit, complete with LLMs-as-judges and custom JavaScript evaluators, brings much-needed rigor to prompt assessment.

What's particularly clever is how they've integrated monitoring with iteration. You can track everything from usage patterns to costs in real time, then use that data to refine your prompts. It's a feedback loop that actually makes sense.

Room for Growth

While Adaline's technical foundation is solid, we'd love to see more case studies and detailed documentation of their success stories. The platform supports over 300 AI models, which is impressive, but newcomers might need more guidance on best practices for each.

Who It's Perfect For

If you're running a serious AI development operation — whether at a startup or enterprise level — and need to collaborate on, evaluate, and monitor LLM applications, Adaline hits the sweet spot. It's especially valuable for teams that need to bridge the gap between technical and non-technical stakeholders in their AI initiatives.

While they're still new to the market, their focus on solving real operational challenges in AI development makes them a compelling choice for teams ready to scale their AI applications professionally.

Features

Iterate and refine prompts collaboratively

Comprehensive prompt evaluation toolkit, including LLMs-as-judges, latency metrics, and custom evaluators

Monitor usage, latency, cost, and health of AI applications

Create datasets from logs, CSV uploads, and live interactions

Automatic versioning and rollback of prompts

Integration with leading AI providers

Content validation for format, length, and quality compliance