We envision a future where building and scaling AI applications is seamless and accessible to all companies, regardless of their size or complexity. Cerebrium is dedicated to transforming the AI infrastructure landscape by removing the burdens of management and inefficiency, enabling creators to focus solely on innovation and impact.

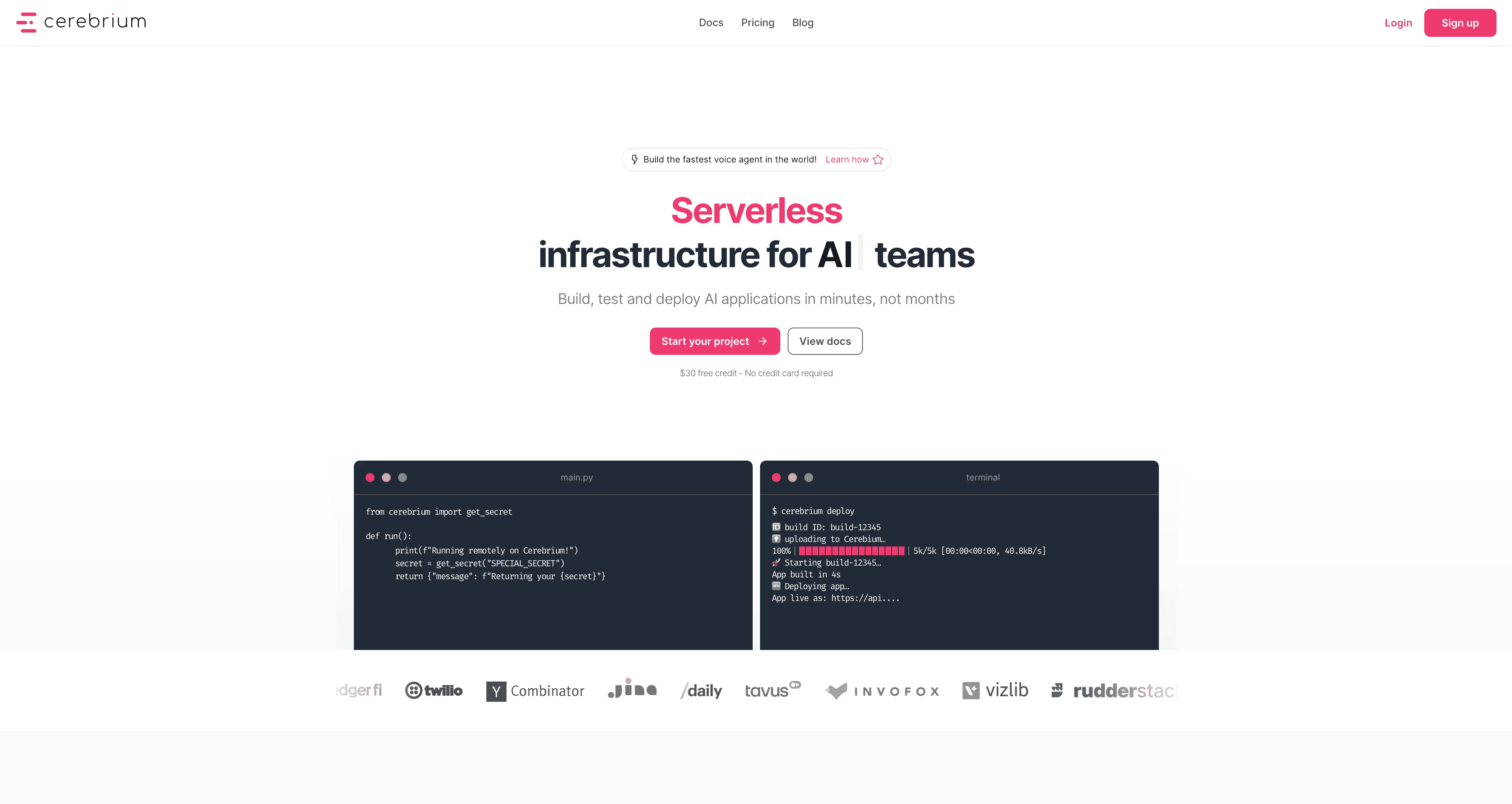

By delivering a serverless GPU cloud platform that abstracts away complexity such as cold starts, autoscaling, and orchestration, we empower engineers to deploy high-performance AI applications globally with unprecedented ease and cost-effectiveness. Our technology drives meaningful change in how AI products scale, perform, and comply with evolving regulatory demands.

At Cerebrium, we are committed to building the foundation for the next generation of AI-enabled experiences that will advance industries, enhance user interactions, and unlock new potential worldwide through intelligent infrastructure and scalable innovation.

Our Review

We've been watching Cerebrium since its Cape Town origins, and honestly, we're impressed by how they've tackled one of AI's biggest headaches: GPU infrastructure that actually makes sense. While most developers are still wrestling with cold starts and scaling nightmares, these folks built something refreshingly different.

The Pay-Per-Second Revolution

Here's what caught our attention first: Cerebrium charges by the second, not by the hour or month. That might sound like a small detail, but it's huge when you're running AI workloads that spike unpredictably. No more paying for idle H100s sitting around doing nothing.
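To make the difference concrete, here is a minimal back-of-the-envelope sketch comparing per-second billing with billing by the clock hour for a spiky workload. The rate, the burst timings, and the function names are all hypothetical and chosen only for illustration; they are not Cerebrium's actual pricing or API.

```python
# Hypothetical rate for illustration only; NOT Cerebrium's actual pricing.
HYPOTHETICAL_RATE_PER_HOUR = 3.60  # USD per GPU-hour (assumed)

# Each burst is (start_second, duration_seconds) within a day (assumed data).
bursts = [(0, 90), (5400, 45), (9000, 120)]


def per_second_cost(bursts, rate_per_hour):
    """Pay only for the seconds the GPU is actually busy."""
    busy_seconds = sum(duration for _, duration in bursts)
    return busy_seconds * rate_per_hour / 3600


def hourly_cost(bursts, rate_per_hour):
    """Pay for every clock hour in which at least one burst runs."""
    hours_touched = set()
    for start, duration in bursts:
        first_hour = start // 3600
        last_hour = (start + duration - 1) // 3600  # hour of the last busy second
        hours_touched.update(range(first_hour, last_hour + 1))
    return len(hours_touched) * rate_per_hour


if __name__ == "__main__":
    print(f"per-second: ${per_second_cost(bursts, HYPOTHETICAL_RATE_PER_HOUR):.3f}")
    print(f"hourly:     ${hourly_cost(bursts, HYPOTHETICAL_RATE_PER_HOUR):.3f}")
```

With these made-up numbers, three short bursts add up to 255 busy seconds but touch three separate clock hours, so the hourly model bills for three full GPU-hours while the per-second model bills for a little over four minutes. The gap narrows as utilization rises, which is why this pricing model matters most for bursty traffic.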

The serverless approach means your models scale from zero to thousands of requests automatically. We've seen this work beautifully for companies like Tavus and Deepgram, who need that kind of elastic performance without the infrastructure headache.

Where They Really Shine

The platform supports everything from T4s to the latest H100s, plus AWS's Trainium and Inferentia chips. That flexibility matters when you're experimenting with different models or optimizing costs. The multi-region deployment is another smart move, since compliance teams love having data residency options.

We particularly like their streaming and WebSocket endpoints. Building real-time AI apps shouldn't require reinventing networking, and Cerebrium gets that. Their observability tools are solid too, giving you the monitoring you need without overwhelming dashboards.

The $8.5M Reality Check

Google's Gradient Ventures led their recent $8.5M seed round, which tells you something about the technical credibility here. When Google's AI-focused fund backs an infrastructure play, it has usually done its homework on the performance claims.

That said, we're curious to see how they handle the enterprise scaling challenge. Moving from impressive startups to Fortune 500 deployments is where many promising infrastructure companies hit turbulence. The early client roster looks promising, but the real test comes with bigger, more complex workloads.

Features

Serverless cloud platform for GPU-based machine learning workloads

Auto-scaling from zero to thousands of requests

Multi-region deployment

Asynchronous job processing

Batch request handling

Supports multiple accelerator types (T4, A10, A100, and H100 GPUs, plus AWS Trainium and Inferentia)

Secrets management

Native streaming and WebSocket endpoints

OpenTelemetry observability

CI/CD pipeline integration

Optimized for performance, reliability, speed, and data residency compliance