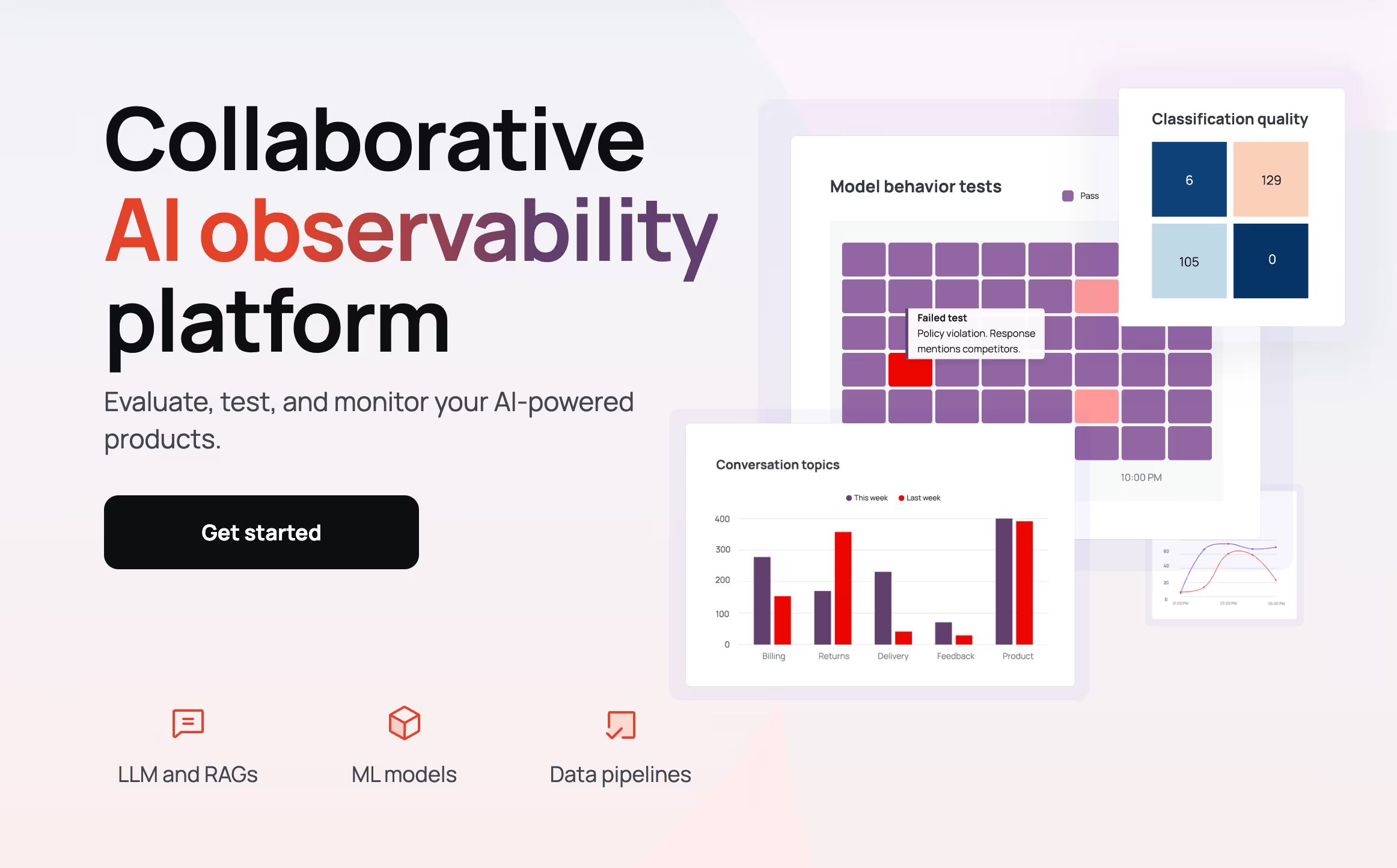

We envision a future where AI systems are transparent, reliable, and seamlessly integrated into the fabric of decision-making across industries. At Evidently AI, our mission is to set a new standard for monitoring machine learning models, empowering enterprises to trust and operate their AI systems with unprecedented accountability and safety.

By developing open-source technologies and comprehensive testing frameworks, we enable data science teams to continuously assess model performance and detect risks before they impact real-world outcomes. Our commitment is to create tools that not only track AI behavior but also unlock the potential for responsible innovation at scale.

Our Review

We've been keeping an eye on Evidently AI since they emerged from Y Combinator in 2021, and honestly, they've impressed us with how they've tackled one of ML's thorniest problems. While everyone's racing to build the next flashy AI model, Evidently's founders, Elena Samuylova and Emeli Dral, decided to solve the unsexy but critical challenge of keeping those models running smoothly in production.

Their timing couldn't have been better. As more companies moved from ML experiments to actual production systems, the need for proper monitoring became painfully obvious.

What Makes Them Stand Out

The open-source approach is what really caught our attention. Instead of building another black-box enterprise solution, Evidently created a Python library whose internals data scientists can actually inspect. We've seen too many monitoring tools that feel like mysterious magic boxes; this one lets you understand exactly what's happening.

Their expansion into LLM and AI agent testing shows they're not just following trends but anticipating where the industry's headed. Testing conversational AI and multi-step workflows isn't just nice-to-have anymore; it's becoming essential as these systems get deployed at scale.

The Real-World Impact

What impressed us most is their case study database—over 650 real ML deployments across industries. That's not just marketing fluff; it's genuine proof that teams are using these tools to solve actual problems. We've noticed that companies with solid monitoring tend to catch issues weeks or months before their competitors do.

Because data science teams can start with the open-source version and scale up later, the tooling is accessible to organizations that aren't ready to commit to expensive enterprise contracts right away.

Who Should Pay Attention

If you're running ML models in production—especially if you've ever woken up at 3 AM because your model started making weird predictions—Evidently AI deserves a serious look. They've built something that feels like it was designed by people who've actually dealt with production ML headaches, not just PowerPoint presentations about them.

The founders' backgrounds (Samuylova's enterprise ML experience at Yandex, Dral's experience creating hands-on ML courses) give us confidence they understand both the technical and business sides of this challenge.

Features

Open-source ML monitoring tool for lifecycle evaluation and testing

Supports tabular and text data

LLM testing platform for quality and safety (RAG and adversarial testing)

AI agent testing for multi-step workflow validation

Case study database of ML and LLM applications