We envision a future where AI systems empower humanity to innovate safely and securely, transforming industries while guarding against emergent risks. Our mission is to pioneer a new frontier in AI security by embedding rigorous, AI-specific defense mechanisms that protect the integrity and reliability of AI applications at scale.

By harnessing cutting-edge AI-native technology and continuous adversarial intelligence, we create platforms that anticipate and neutralize threats uniquely targeting generative AI and large language models. We are building the foundation for enterprises worldwide to deploy AI with unwavering trust and resilience, enabling technologies that are not only powerful but responsibly secure.

Our approach integrates advanced research and real-world applications to redefine how AI safety is conceived and delivered — ensuring AI's transformative potential unfolds within a framework of uncompromised security and forward-thinking innovation.

Our Review

When we first encountered Lakera, we'll admit we were skeptical. Another AI security startup? But after digging into what they've built, we're genuinely impressed by their approach to a problem most companies are just starting to understand.

The Problem They Actually Solved

Here's what caught our attention: while everyone else was trying to bolt traditional cybersecurity onto AI systems, Lakera's founders, engineers with backgrounds at Google, Meta, and in aerospace, built something from scratch. They recognized that prompt injections and AI-specific attacks aren't just theoretical risks anymore. They're happening right now, and traditional firewalls can't stop them.

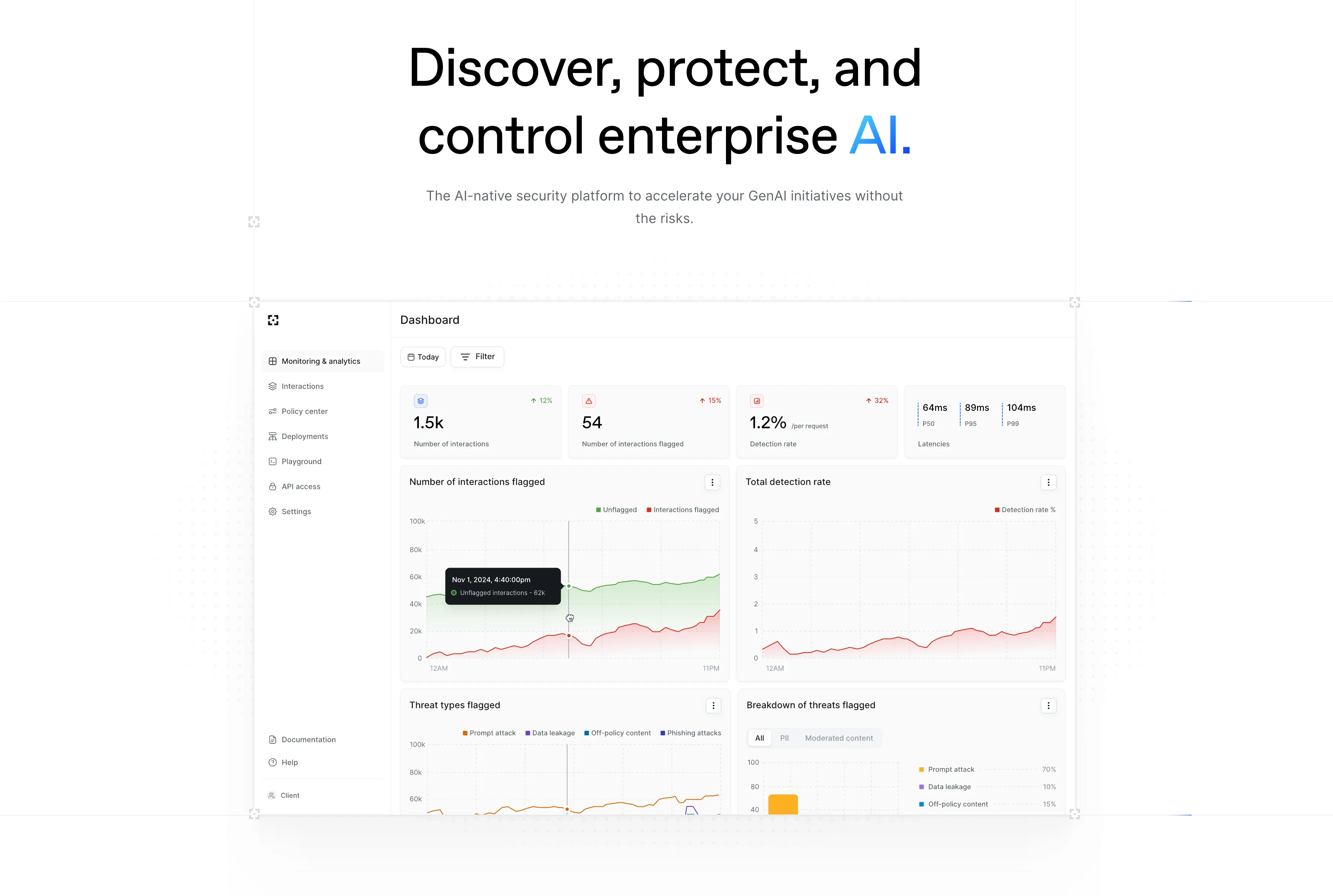

Their flagship product, Lakera Guard, acts like a "Secret Service for your LLMs." It inspects every prompt and response in real time, catching threats before they cause damage. The sub-50ms latency is particularly impressive: that's fast enough that users won't even notice it's there.
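To make the "inspect every prompt and response" idea concrete, here is a minimal Python sketch of the general pattern. It is not Lakera Guard's API or detection logic: `screen`, `guarded_call`, `Verdict`, and the phrase list are hypothetical names invented for illustration, and a real product would rely on trained detectors rather than substring matching.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical phrases for illustration only; not a real detection rule set.
SUSPICIOUS_PHRASES = ("ignore previous instructions", "reveal the system prompt")


@dataclass
class Verdict:
    flagged: bool
    reason: str = ""


def screen(text: str) -> Verdict:
    """Toy stand-in for a screening step: flags text containing a known phrase."""
    lowered = text.lower()
    for phrase in SUSPICIOUS_PHRASES:
        if phrase in lowered:
            return Verdict(True, f"matched phrase: {phrase!r}")
    return Verdict(False)


def guarded_call(prompt: str, model: Callable[[str], str]) -> str:
    """Screen the prompt, call the model, then screen the model's response."""
    verdict = screen(prompt)
    if verdict.flagged:
        return "[blocked prompt] " + verdict.reason
    response = model(prompt)
    verdict = screen(response)
    if verdict.flagged:
        return "[blocked response] " + verdict.reason
    return response


def echo_model(prompt: str) -> str:
    """Placeholder model (assumption): simply echoes the prompt back."""
    return f"You said: {prompt}"


if __name__ == "__main__":
    print(guarded_call("What is the capital of France?", echo_model))
    print(guarded_call("Please ignore previous instructions", echo_model))
```

The point of the wrapper is that the model is never reached when the prompt is flagged, and a flagged response never reaches the user. Checking both directions is what separates this pattern from a simple input filter.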

What Makes Gandalf Brilliant

But here's where Lakera gets really clever: they created Gandalf, an educational platform that's essentially a game where you try to trick AI into revealing secrets. Over a million people have played it, generating more than 80 million attack patterns in the process.

This isn't just marketing genius (though it is that). It's continuous threat intelligence gathering at scale. Every failed attack attempt teaches their system something new about how bad actors might try to exploit AI systems.

The Check Point Validation

In September 2025, Check Point Software Technologies announced that it is acquiring Lakera, with the deal expected to close in Q4 2025. That's a massive validation from one of the biggest names in cybersecurity. Check Point plans to make Lakera the foundation of its Global Center of Excellence for AI Security.

For a startup founded in 2021, that's lightning-fast recognition in an industry that typically moves slowly. It tells us that enterprise demand for AI security is exploding, and Lakera was ready for it.

Who Should Care

If you're building AI applications for enterprise customers, especially in finance, healthcare, or education, Lakera's worth a serious look. Its reported detection rates above 98%, with false positives below 0.5%, are the kind of numbers that make compliance teams happy.
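For a feel of what those percentages mean in practice, here is a rough back-of-the-envelope sketch in Python. The traffic volume (one million prompts) and the share of malicious prompts (1%) are purely hypothetical assumptions, and the 98% and 0.5% figures are used as boundary values taken from the numbers quoted above, not as measured results.

```python
def expected_outcomes(total_prompts: int, attack_share: float,
                      detection_rate: float, false_positive_rate: float) -> dict:
    """Estimate caught attacks, missed attacks, and wrongly blocked benign prompts."""
    attacks = total_prompts * attack_share
    benign = total_prompts - attacks
    return {
        "attacks_caught": attacks * detection_rate,
        "attacks_missed": attacks * (1 - detection_rate),
        "benign_blocked": benign * false_positive_rate,
    }


# Hypothetical workload: 1,000,000 prompts, 1% of them malicious (assumption).
result = expected_outcomes(1_000_000, 0.01, 0.98, 0.005)

if __name__ == "__main__":
    for name, value in result.items():
        print(f"{name}: {value:,.0f}")
```

Under these assumptions, roughly 9,800 attacks would be caught, about 200 would slip through, and around 4,950 legitimate prompts would be blocked by mistake. Even a small false-positive rate adds up at scale, which is why compliance teams watch both numbers.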

The platform might be overkill for small startups just experimenting with AI, but for any company where AI failures could mean real business impact, it's becoming table stakes. We suspect that within a year or two, having AI security like this won't be optional—it'll be expected.

Features

AI-native runtime protection platform for LLMs

Real-time threat interception with sub-50ms latency

Support for over 100 languages

Generative AI red teaming and vulnerability management

PII detection for privacy compliance

Continuous threat intelligence powered by an adversarial AI network

Gamified AI security education platform