Cleanlab envisions a future where AI systems operate safely and accurately even when fed imperfect, real-world data. By transforming how organizations clean and curate data, Cleanlab empowers the creation of AI applications that are not only reliable but also aligned with strategic business goals.

Born out of pioneering MIT research, Cleanlab builds tools that detect and correct labeling errors, improving the data that AI models learn from. Its integrated tools streamline data quality assurance across diverse data types, making trustworthy AI accessible at scale.

At its core, Cleanlab is dedicated to bridging the gap between messy data and dependable AI, fueling a new era of intelligent solutions that can be deployed confidently across industries and use cases.

Our Review

We've been keeping an eye on Cleanlab for a while now, and frankly, their origin story had us hooked from the start. When Curtis Northcutt discovered major labeling errors in ImageNet—one of AI's most foundational datasets—during his MIT PhD work, it wasn't just an "oops" moment. It was the spark that led to something genuinely useful for anyone wrestling with messy, real-world data.

The Problem They Actually Solve

Here's what we love about Cleanlab's approach: they're not trying to reinvent AI from scratch. Instead, they're fixing one of the industry's most persistent headaches—garbage data leading to garbage results. Their core insight is brilliantly simple: use AI to clean up the data that trains other AI systems.

Cleanlab Studio automates the tedious work of data curation and quality control across images, text, and structured datasets. We've seen plenty of "data cleaning" tools before, but most require you to be a data scientist to use them effectively. Cleanlab's no-code interface removes that barrier.

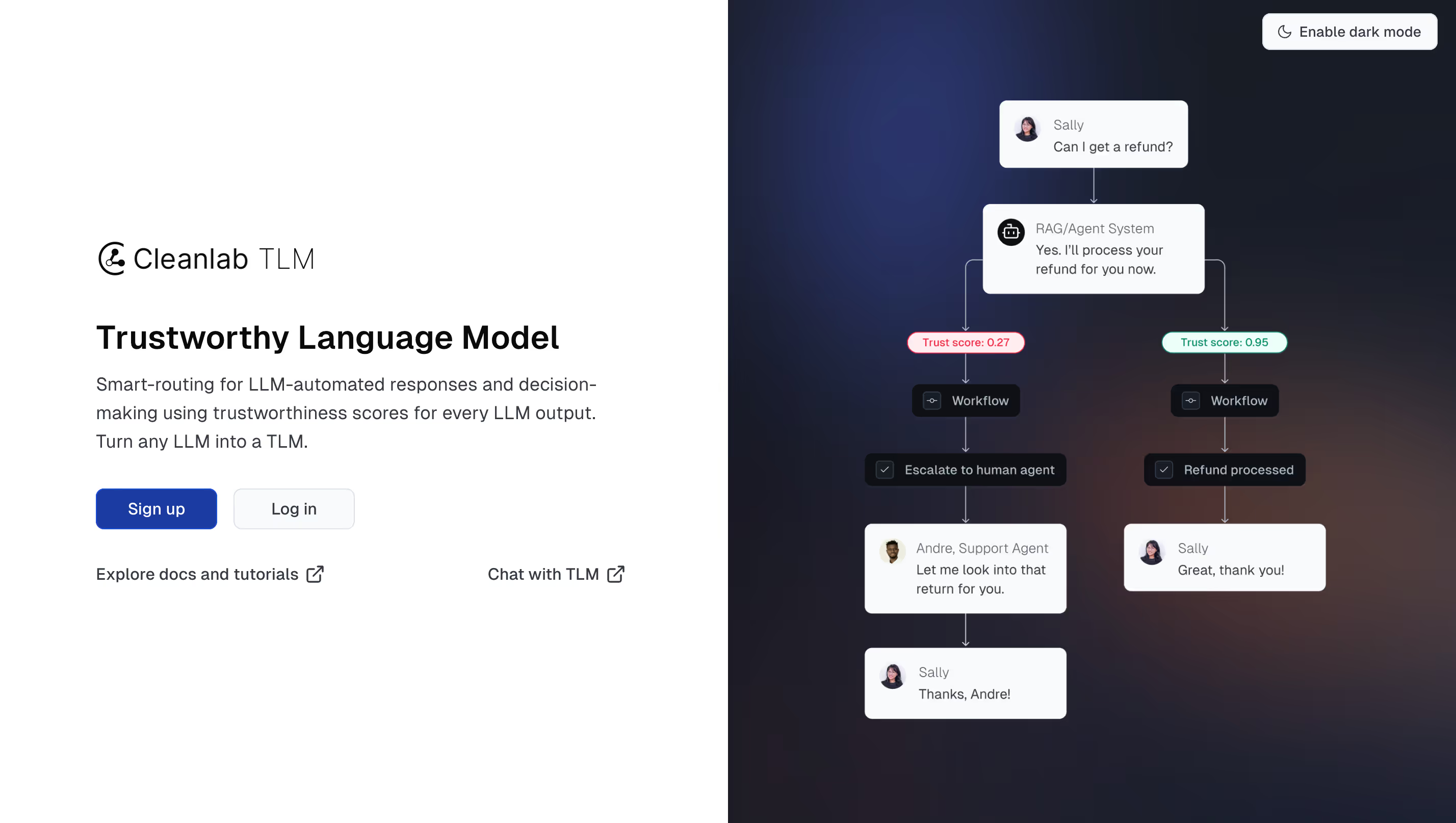

The TLM Twist That Got Our Attention

What really caught our eye was their Trustworthy Language Model (TLM). While everyone's talking about LLM hallucinations, Cleanlab actually built something to address them—a trustworthiness score for AI outputs. It's like having a built-in fact-checker that tells you when to trust what your AI is saying.

This isn't just clever engineering; it's addressing a real business problem. Companies want to deploy AI, but they're terrified of the reliability issues. TLM gives them a safety net.

Who This Really Works For

We think Cleanlab hits a sweet spot in the market. They're not just for AI researchers or Fortune 500 companies with massive data teams. Their tools work for startups trying to get AI projects off the ground, consultants who need reliable results for clients, and mid-sized companies that can't afford to hire a dozen data scientists.

The $25 million Series A they raised in late 2023 from Menlo Ventures, Databricks Ventures, and others suggests investors see the same opportunity we do. When Databricks joins your funding round, that's usually a good sign you're solving a problem that scales.

Bottom line: Cleanlab isn't flashy, but they're tackling the unglamorous work that makes AI actually reliable. In a world full of AI hype, that's refreshingly practical.

Features

Automates data curation, annotation, and quality control

Supports images, text, and structured data

No-code interface and Python APIs

Trustworthy Language Model providing trustworthiness scores to LLM outputs

Helps identify and fix labeling errors in datasets
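
To make the label-error idea a bit more concrete, here's a minimal sketch of how a model's own predictions can flag suspicious labels. To be clear, this is our simplified illustration, loosely in the spirit of the confident learning research behind Cleanlab, and not Cleanlab's actual implementation or API. The function name `find_suspect_labels` and the toy data are hypothetical. The assumption is that you already have a predicted probability for each class on every example (ideally from held-out predictions).

```python
def find_suspect_labels(labels, pred_probs):
    """Return indices whose given label disagrees with a confident prediction.

    Hypothetical helper for illustration only; not part of any Cleanlab API.
    labels: list of int class ids; pred_probs: list of per-class probabilities.
    """
    n_classes = len(pred_probs[0])

    # Per-class threshold: the average probability the model assigns to
    # class k across the examples that are labeled k.
    sums = [0.0] * n_classes
    counts = [0] * n_classes
    for y, probs in zip(labels, pred_probs):
        sums[y] += probs[y]
        counts[y] += 1
    # A class with no labeled examples gets threshold 1.0, so the model is
    # treated as confident about it only at probability 1.0.
    thresholds = [s / c if c else 1.0 for s, c in zip(sums, counts)]

    suspects = []
    for i, (y, probs) in enumerate(zip(labels, pred_probs)):
        # Classes the model is "confident" about for this example.
        confident = [k for k in range(n_classes) if probs[k] >= thresholds[k]]
        if confident:
            best = max(confident, key=lambda k: probs[k])
            if best != y:
                suspects.append(i)
    return suspects


# Toy data: six examples, two classes. Examples 2 and 5 look mislabeled.
labels = [0, 0, 0, 1, 1, 1]
pred_probs = [
    [0.90, 0.10],
    [0.80, 0.20],
    [0.20, 0.80],
    [0.10, 0.90],
    [0.30, 0.70],
    [0.85, 0.15],
]
suspects = find_suspect_labels(labels, pred_probs)
print(suspects)  # [2, 5]
```

The per-class threshold is the design choice worth noticing: instead of one global cutoff, each class is judged against how confident the model typically is for that class, which keeps a cautious model from flagging everything. A real tool would then rank the flagged examples and route them to a human for review.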