We envision a future where AI adoption is empowered by unwavering trust and robust responsibility, enabling organizations worldwide to harness the potential of artificial intelligence with confidence and integrity.

Our mission is to bridge the divide between rapid AI innovation and the intricate requirements of ethical governance and regulatory compliance, crafting a landscape where AI systems serve society safely and transparently.

Through pioneering governance platforms and strategic partnerships, we are building the infrastructure for accountable AI, ensuring every enterprise can navigate the complexities of AI risk and compliance to foster trustworthy technological advancement.

Our Review

We'll be honest—when we first heard about Trustible, we thought "great, another AI compliance tool." But after digging into what this Arlington-based startup is actually building, we found ourselves genuinely impressed by their approach to a problem that's keeping CTOs awake at night.

Founded in 2023, Trustible isn't just another software company trying to capitalize on AI hype. They've structured themselves as a Benefit Corporation (think Patagonia for AI governance), which tells us they're serious about balancing profit with purpose. That's not just good marketing—it's a legal commitment to social impact.

What Actually Sets Them Apart

Here's where Trustible gets clever: instead of building yet another standalone compliance tool, they've created a platform that actually integrates with your existing AI/ML workflows. We've seen too many governance solutions that require teams to completely overhaul their processes, and frankly, most organizations just won't do it.

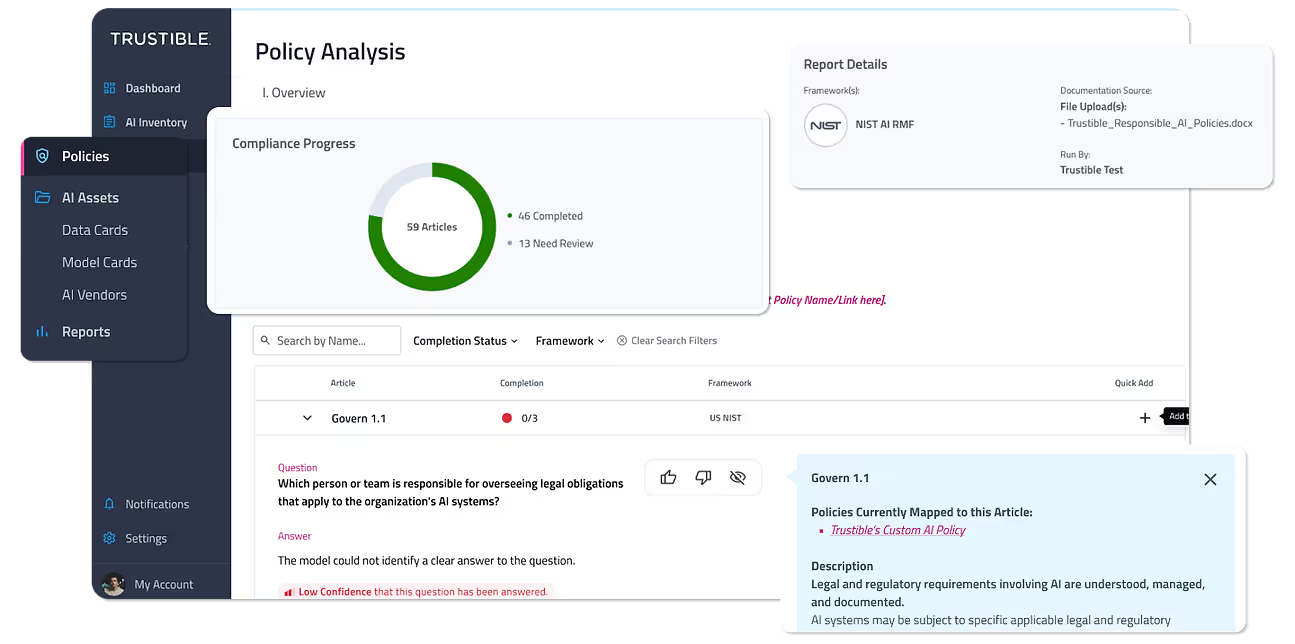

Their centralized inventory approach is particularly smart. Think of it as a "system of record" for all your AI initiatives—models, data sources, vendors, the works. For large enterprises juggling dozens or hundreds of AI projects, this visibility alone is worth the price of admission.

The Insurance Angle Is Brilliant

This is where we got excited: Trustible partnered with Armilla AI to offer actual insurance coverage for AI model errors and regulatory violations. Traditional cyber insurance often doesn't cover AI-specific risks, which can leave companies exposed to potentially massive liabilities. It's a gap in the market that's been hiding in plain sight.

The fact that they're mapping compliance to frameworks like the EU AI Act and NIST AI RMF shows they understand the regulatory landscape isn't just American. With 87% of their customers operating internationally, that global perspective isn't optional—it's essential.

Who Should Pay Attention

If you're a Fortune 500 company or operate in heavily regulated industries, Trustible deserves a serious look. Their customer base speaks for itself—a significant portion of Fortune 500 companies are already using the platform, just one year after founding.

Government agencies should also take note, especially with Trustible's Carahsoft partnership opening doors to public sector sales. We expect to see more adoption here as federal AI regulations continue to evolve.

The $4.6 million Series Seed round gives them runway to build, but more importantly, it validates that smart money sees the same opportunity we do. In a world where AI governance is shifting from "nice to have" to "business critical," Trustible seems positioned to ride that wave.

Features

Centralized Inventory and Visibility of AI assets

Risk Assessment and Mitigation workflows

Regulatory Mapping to frameworks like EU AI Act, NIST AI RMF, ISO 42001

Automated Documentation and Reporting tools

Integration with existing AI/ML systems

AI Insurance partnership for model errors and regulatory violations