At TrustLab, we envision a safer digital future where every interaction on social platforms, marketplaces, and mobile apps is protected by intelligent, adaptive safety technologies. Our purpose is to empower organizations with the tools and insights needed to foster trust and mitigate harm in ever-evolving online communities.

We harness the power of cutting-edge AI integrated with human expertise to build dynamic solutions that address complex safety challenges across diverse content types and platforms. By enabling smarter, scalable moderation and policy enforcement, we are redefining how trust is maintained in the digital age.

Driven by deep industry experience and innovative research, TrustLab leads the way in shaping a transparent, secure online ecosystem where users and businesses can thrive confidently and responsibly.

Our Review

When we first encountered TrustLab, we were struck by something refreshing: here's a company that actually knows what it's talking about. Founded by veterans from Google, YouTube, Reddit, and TikTok with over 40 years of combined Trust & Safety experience, this isn't another startup throwing around AI buzzwords. These folks have been in the trenches of content moderation at scale.

The timing couldn't be better. As online platforms grapple with everything from deepfakes to coordinated harassment campaigns, TrustLab emerged in 2020 with a clear mission: make the internet safer through smarter technology and hard-earned expertise.

What Caught Our Attention

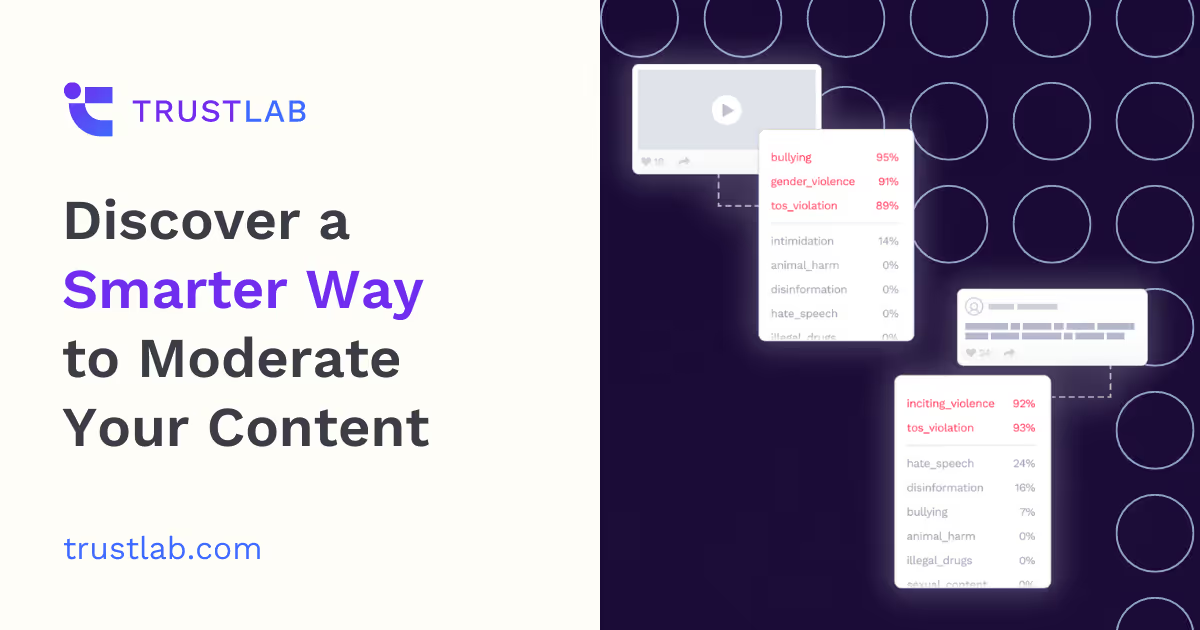

TrustLab's flagship product, ModerateAI, impressed us with its practical approach to content moderation. Instead of trying to replace human judgment entirely, it enhances it. The system handles text, images, video, and mixed content—which is crucial since bad actors don't limit themselves to one format.

What we found particularly smart is how they've positioned themselves as an external solution provider. Many companies struggle with building Trust & Safety capabilities from scratch, and TrustLab fills that gap with battle-tested expertise.

The European Commission Validation

Here's where things get interesting. In 2023, the European Commission selected TrustLab to conduct a major study on misinformation across social platforms in Poland, Slovakia, and Spain. That's not the kind of contract you win without serious credibility.

This study represents more than just a business milestone: it could become a benchmark for how online misinformation is measured and monitored going forward. When government bodies trust you with research that shapes policy, you're clearly doing something right.

Who Should Pay Attention

We see TrustLab as particularly valuable for two types of organizations. First, smaller platforms that need enterprise-grade safety tools but can't afford to build them in-house. Second, larger companies looking to augment their existing teams with specialized expertise.

Their client roster, which includes Outdoorsy, Doximity, and Iterable, shows they can adapt to different industries and scale requirements. In the Trust & Safety space, that versatility is harder to achieve than it sounds.

The media attention from outlets like The Verge, Forbes, and BBC suggests the industry is taking notice. In a field where trust is literally in the name, that external validation matters.

Features

ModerateAI for AI-powered content moderation

Combines human expertise with AI efficiency

Supports multiple content modalities: text, images, video, and mixed content

Automates identification and management of problematic content

Builds and enforces Trust & Safety policies