🎤 Full Interview: Nir Gazit, Co-founder & CEO @ Traceloop

"We won’t rest until AI developers can have the same confidence and discipline that has existed in software engineering for years."

Founder Story & Vision

Who they are & what they’re building

Nir Gazit is the co-founder and CEO of Traceloop, a YC-backed startup building the observability and evaluation stack for LLM applications. Before Traceloop, he led ML engineering at Google and served as Chief Architect at Fiverr. He teamed up with Gal Kleinman (also ex-Fiverr) after realizing how difficult it was to debug and improve LLM agents in production. Their early GPT-3 experiments worked… until they didn’t — and they had no idea why. That led to Traceloop.

Why now & what’s the big bet

2025 is the year GenAI goes into production. Enterprises like Miro, Cisco, and IBM are finally putting LLM agents to work in support, code generation, and internal data tasks. But there’s a critical gap: you can’t improve what you can’t measure. Nir’s big bet is that AI apps need the same observability tooling we use for traditional software (tracing, metrics, alerts, evals); without it, teams risk hallucinations, broken user experiences, and eroding trust. With Traceloop, he wants to close the feedback loop between real-world usage and model improvement.

🧩 Real-World Use Cases

How it works in the wild

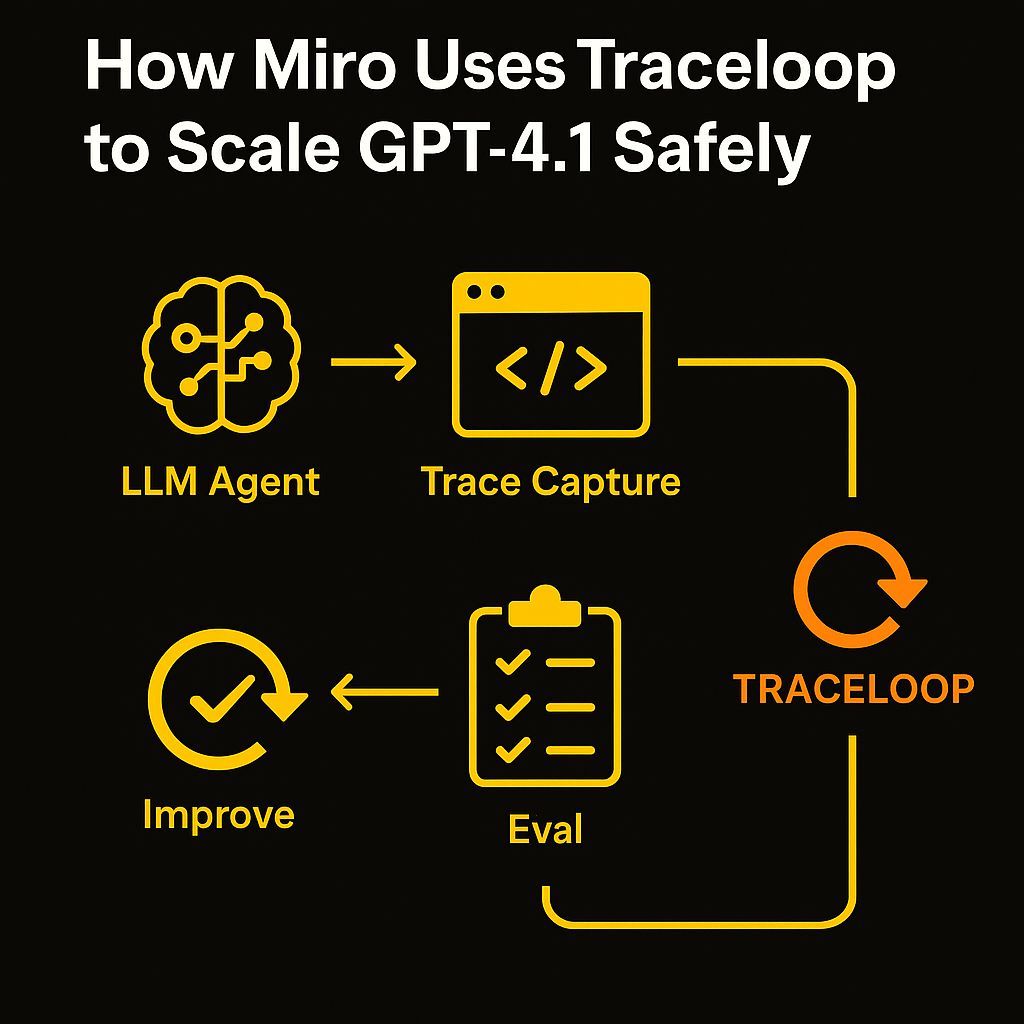

Miro uses Traceloop to monitor GPT-4.1 performance inside its collaborative whiteboard platform. The team needed to safely roll out an LLM-powered assistant that helps users plan, draw, and generate frameworks without it “going rogue.” Traceloop’s platform gave them real-time insight into hallucination rates, latency, and tool-usage breakdowns, enabling confident deployment at scale.

IBM and Cisco use Traceloop to trace complex agent workflows, where LLMs use tools, call APIs, and make decisions. Traceloop helps flag tool-call failures, behavioral drift, and hidden costs before users notice them.

Startups tap into OpenLLMetry — Traceloop’s open-source SDK — to collect token-level traces, eval scores, and prompt versions. It’s everything they need, with zero platform lock-in.

What you’ll learn:

How Nir navigated the shift from LLM playgrounds to production systems

A framework for closing the loop between LLM usage, tracing, and automated evals

Real tactics behind open-source-led growth (500K+ downloads/month)

Lessons on founding during platform shifts (and pitching a technical tool to non-technical buyers)

How Traceloop is solving the “black box” problem of LLM agents in production

Key takeaways:

Treat prompts like code: version, trace, test, and improve.

Observability isn’t optional — it's your first defense against hallucinations and drift.

Open source plus product-led growth can build traction fast when developers find real value.

Traceloop’s secret weapon: agent-first tracing, not just logging.

The next frontier: auto-improving agents using real-world data as feedback.

In this episode, we cover:

00:00 – Why Traceloop exists: from GPT chaos to clarity

03:00 – From Google & Fiverr to co-founding a YC startup

06:00 – LLMs fail silently: real-world horror stories

09:00 – OpenLLMetry, 2M+ downloads & go-to-market via open source

12:30 – Enterprise GenAI adoption: why 2025 is the turning point

16:00 – Prompt engineering is fake, AI coding is real (and weird)

20:00 – Junior vs. senior engineers in the age of AI

24:00 – AGI, AI limitations & what’s realistically possible

28:00 – Traceloop’s vision: auto-improving agents & full feedback loop

33:00 – Startup lessons, YC advice, hiring culture & future of work in AI

For inquiries about sponsoring the podcast, email david@thehomabase.ai

Referenced in the Episode:

OpenLLMetry – Traceloop’s open-source SDK for LLM tracing

Traceloop Platform – Full observability stack for LLMs and agents

Cursor – AI-powered code editor

Claude – Anthropic’s family of LLMs

OpenTelemetry – The open standard Traceloop is built on

Y Combinator (W23) – Startup accelerator backing Traceloop (yc.com)

“Software 2.0” – Essay by Andrej Karpathy
